In this post I’ll summarize how to expose web applications on EKS (or self-managed Kubernetes) in a fully automated AWS infrastructure environment, covering the challenges together with their solutions.

Let’s start with the default option we know from Kubernetes 101: to expose an application on Kubernetes, you create a Service of type LoadBalancer. This method is valid and provided directly by Kubernetes*.

*https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types
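For reference, a minimal Service of this kind might look like the sketch below. The name `my-app`, the `app: my-app` label and the ports are placeholders. The sketch assumes your application's pods carry that label and listen on port 8080.

```yaml
# Minimal sketch: one public load balancer per application (the approach discussed below).
apiVersion: v1
kind: Service
metadata:
  name: my-app              # hypothetical application name
spec:
  type: LoadBalancer        # the cloud provider provisions an external load balancer
  selector:
    app: my-app             # assumed label on the application's pods
  ports:
    - port: 80              # port exposed on the load balancer
      targetPort: 8080      # assumed container port
      protocol: TCP
```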

This declaration creates an AWS Classic Load Balancer, and Kubernetes collects the load balancer endpoint, which looks something like this: 3456yhgfdswe45tygfder45-123453234.eu-central-1.elb.amazonaws.com

Although it’s super simple and straightforward, there are a couple of problems with this approach.

Governance

Who created this load balancer? Is it part of the IaC repository?

First of all, a real microservices environment has lots of public applications. If you create a load balancer for each of them, the AWS account ends up with many load balancers, and you have no single point where you can enforce your infrastructure-level security controls. It’s also expensive, because you pay for every hour each load balancer runs. From the automation and IaC point of view, you end up with resources in your AWS environment that were created outside of your infrastructure code.

As a result, creating a load balancer for each service from Kubernetes is similar to serving your web projects directly from a server, and, beyond that, with a new port for each domain.

SSL, Integrating with Web Application Firewall (WAF) and CloudFront Caching

After creating the load balancer, the next task is enabling SSL offloading at the load balancer. Although it’s possible to offload SSL traffic at the Kubernetes Service, we would rather do it outside our cluster. Achieving this programmatically is challenging, because your infrastructure-as-code repository is not aware of the newly created load balancer.

The same reason causes another problem when integrating the newly created load balancer with, for example, AWS WAF: again, the code managing your infrastructure is not aware of the new load balancer.

Last but not least, integrating the load balancers with CloudFront for caching runs into the same problem.

Besides all these technical challenges, as the infrastructure provider we don’t want developers to think about caching, SSL offloading and isolation for each project; we want them to focus on development.

Exposing Kubernetes Applications in a Controlled Way

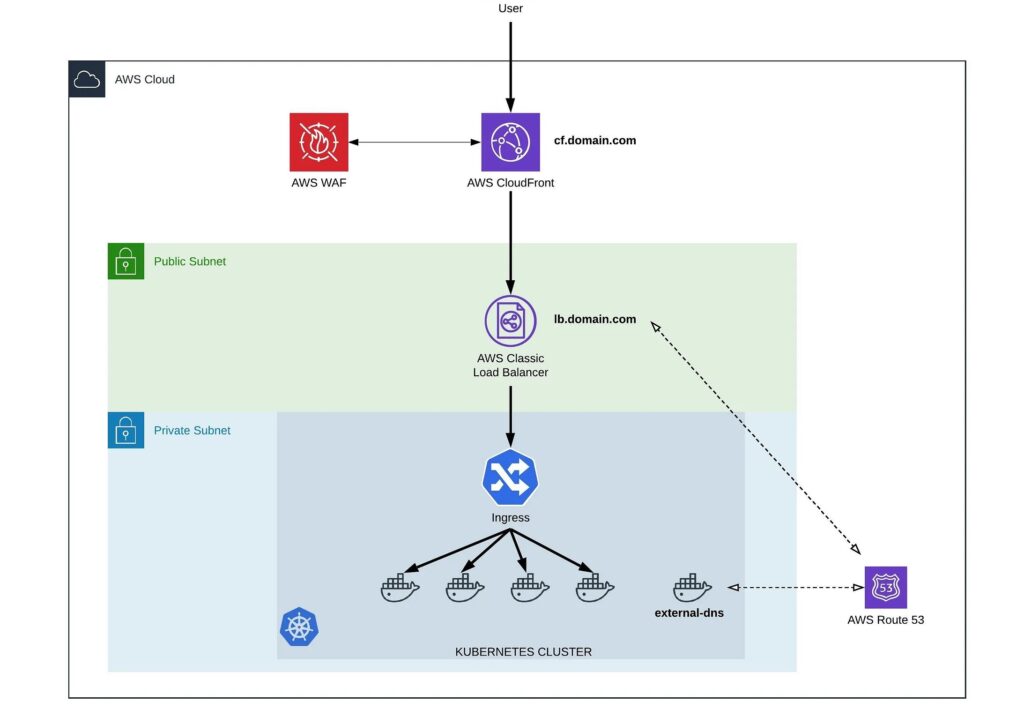

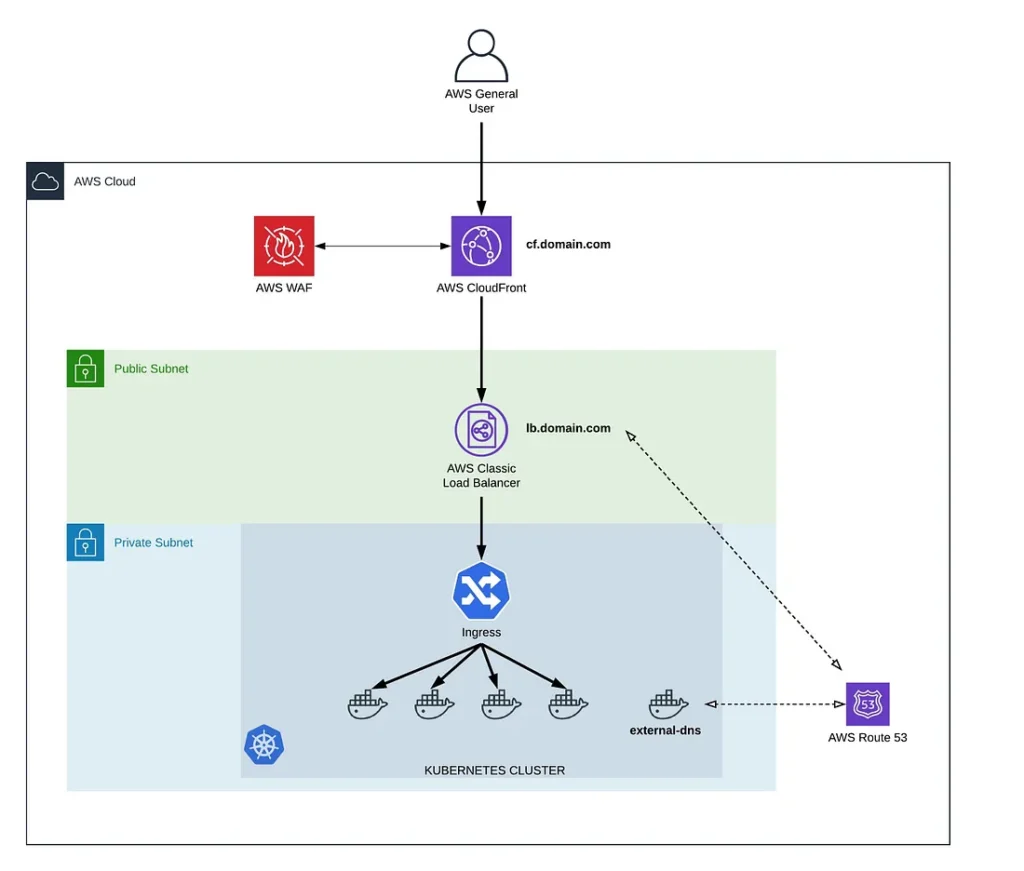

To address these challenges, we’ll configure a setup using:

- A CloudFront distribution, to offload SSL traffic, provide a static integration endpoint for the WAF service, isolate Kubernetes resources from the outside world, and cache content

- A single Classic Load Balancer to expose all public applications, created by a Kubernetes Service resource (type: LoadBalancer)

- The Kubernetes external-dns project, together with Route53, to maintain a static CNAME record for the Kubernetes-created load balancer; this record is used to direct traffic from CloudFront to the Kubernetes-managed AWS Classic Load Balancer

- The Nginx Ingress Controller, to redistribute traffic inside Kubernetes

With this setup, any new service defined inside Kubernetes sits behind CloudFront and WAF, and SSL is configured and managed from one point. If needed, whitelisting and blacklisting can be done from one location, managed by the IaC repository. So from the governance point of view, we don’t need to check security requirements, SSL configuration, etc. separately for each service.

Architecture Diagram

*In this post we assume there’s already a running Kubernetes cluster in AWS, with kubectl configured.

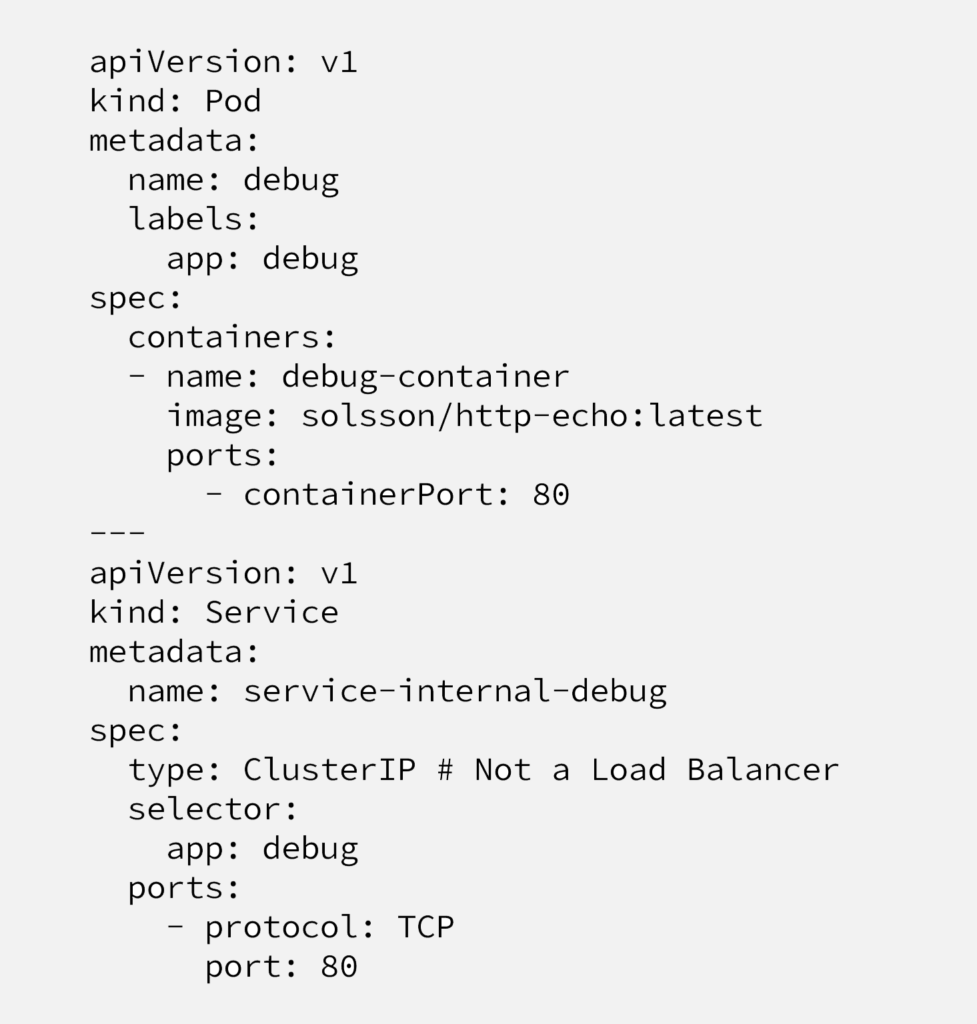

1. Pod and Service

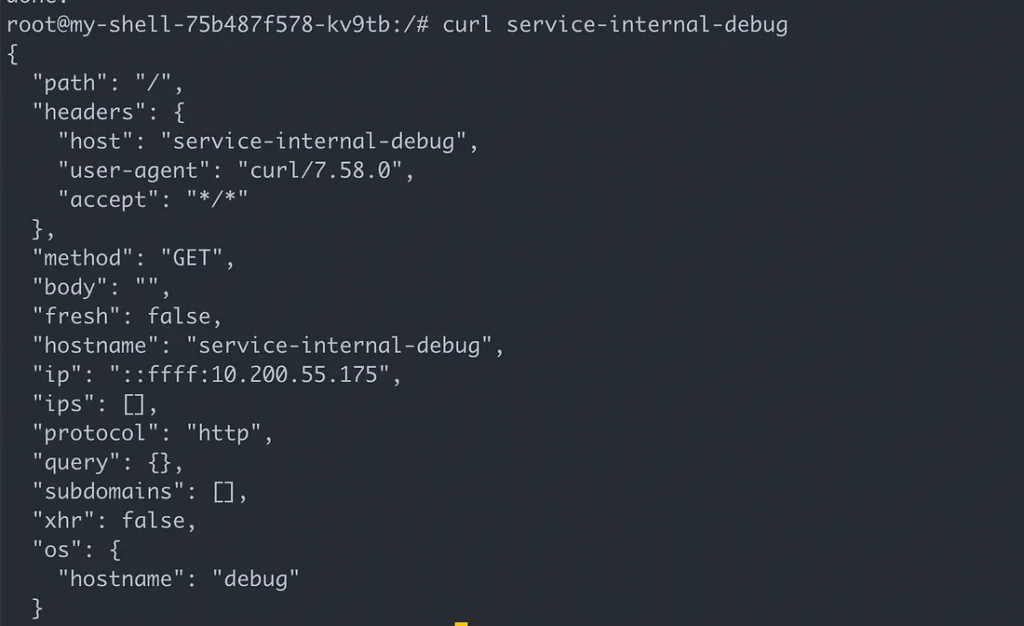

The first step is creating a simple Pod and Service so we can test our setup. The code below creates a Pod running an application that prints the request headers and the hostname; any application that prints some output will work. The second part creates a Service of type ClusterIP, which is accessible only from inside the cluster. The Nginx Ingress Controller will use this internal endpoint to reach the application.

It’s important to mention that we’ll create an “internal-service-app-name” Service for each application. These Services only expose applications internally. They are consumed by internal consumers such as other applications, or by the Nginx Ingress Controller on behalf of external consumers. The other Service (type: LoadBalancer) is created only once.

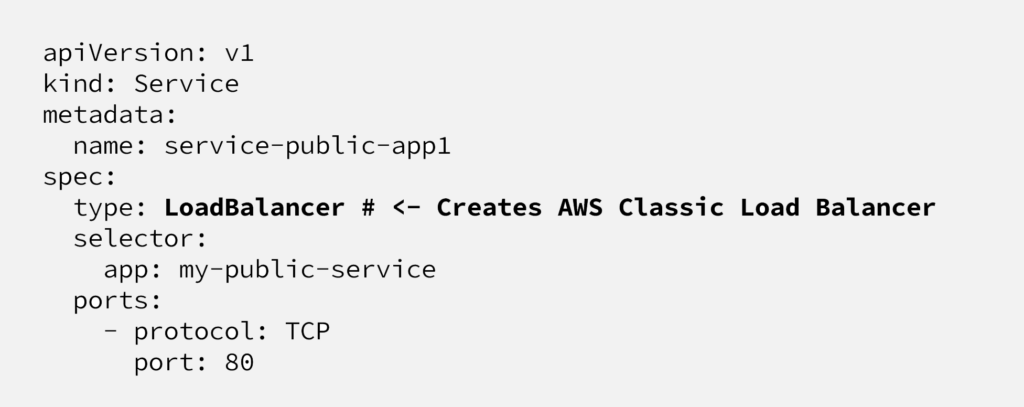
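A comparable Pod and internal Service could be written as below. This is a sketch, not the manifest from the screenshot. It uses `hashicorp/http-echo` as a stand-in test application, which returns a fixed text instead of headers and hostname. The name `echo` is hypothetical and follows the “internal-service-app-name” convention.

```yaml
# Sketch: a test Pod plus its cluster-internal Service.
apiVersion: v1
kind: Pod
metadata:
  name: echo                        # hypothetical application name
  labels:
    app: echo                       # label selected by the Service below
spec:
  containers:
    - name: echo
      image: hashicorp/http-echo    # stand-in test app, not the one from the screenshot
      args: ["-text=hello from echo"]
      ports:
        - containerPort: 5678       # http-echo's default listen port
---
apiVersion: v1
kind: Service
metadata:
  name: internal-service-echo       # "internal-service-app-name" convention
spec:
  type: ClusterIP                   # reachable only from inside the cluster
  selector:
    app: echo
  ports:
    - port: 80                      # port other workloads and the ingress controller use
      targetPort: 5678              # forwards to the container port above
```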

2. Load Balancer Service

The next step is creating a load balancer, to provide a single endpoint for external consumers, and an Nginx Ingress Controller, to redistribute the traffic internally.

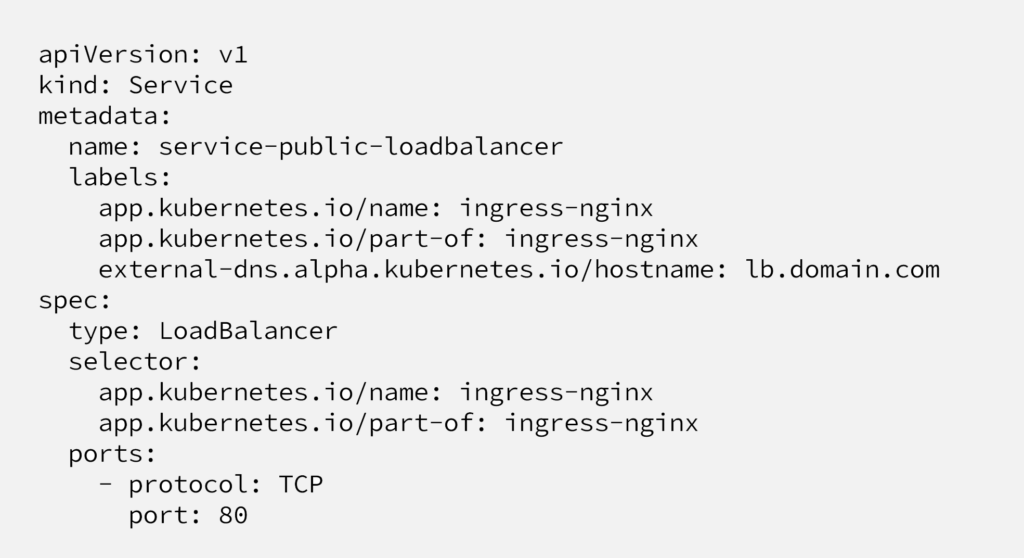

The manifest below creates an AWS Classic Load Balancer. I highly recommend visiting the official Kubernetes documentation for the available annotations and limitations. We intentionally create a Classic Load Balancer (the default option) rather than a Network Load Balancer, because with a Classic Load Balancer it’s possible to adjust the idle timeout, health check intervals, etc.

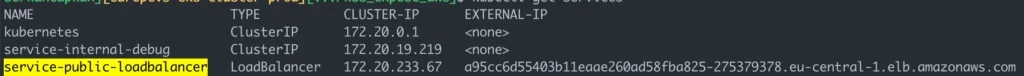

Here we create a new Service (type: LoadBalancer), which will be the only external endpoint to our cluster. As can be seen in the screenshot, the Kubernetes controller asks AWS to create a Classic Load Balancer and shows the DNS name of the load balancer, provided by AWS.

As mentioned before, the problem here is that AWS provides a random URL, and neither other AWS services nor our DNS provider are aware of this record. We’ll solve this problem in the External DNS section.

In the selector part, we attach this Service (load balancer) to a Kubernetes application named “ingress-nginx”, which doesn’t exist yet.
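A Service along these lines might look like the following sketch. It assumes the in-tree AWS cloud provider handles the Service. If the AWS Load Balancer Controller is installed, it may provision a Network Load Balancer instead. It also assumes the controller runs in the `ingress-nginx` namespace with the `app.kubernetes.io/name: ingress-nginx` pod label and a container port named `http`. The timeout and health-check values are illustrative only.

```yaml
# Sketch: the single public entry point, one Classic Load Balancer for the whole cluster.
apiVersion: v1
kind: Service
metadata:
  name: service-public-loadbalancer
  namespace: ingress-nginx                  # assumed namespace of the ingress controller
  annotations:
    # Classic Load Balancer tuning supported by the in-tree AWS cloud provider
    service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "120"
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-interval: "10"
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-timeout: "5"
spec:
  type: LoadBalancer        # no aws-load-balancer-type annotation, so a Classic Load Balancer
  selector:
    app.kubernetes.io/name: ingress-nginx   # must match the ingress controller pods' labels
  ports:
    - name: http
      port: 80              # SSL is offloaded in front of this load balancer, so HTTP only
      targetPort: http      # assumed named container port of the controller
```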

3. Nginx Ingress Controller

The Nginx Ingress Controller is an ingress controller for Kubernetes, maintained by the Kubernetes project, that provides proxying features in front of web applications. It runs Nginx web server deployments with the proxying configuration. Keep in mind that there are quite a few other ingress controllers, including another Nginx ingress controller maintained by NGINX Inc.

In this post we won’t go through the whole nginx-ingress setup and configuration; it can be found at this link. The only part we’re interested in is the one below:

Nginx ingress deploys pods inside the cluster, and the load balancer we’ve created (service-public-loadbalancer) directs traffic to these pods, thanks to the selector in the Service definition. The definition above then redistributes the traffic based on hostname, URI, etc.
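An Ingress rule of the kind described above might look like this sketch. It routes the hypothetical host project.domain.com to the internal-service-echo Service. It assumes the `networking.k8s.io/v1` API (Kubernetes 1.19+) and an IngressClass named `nginx`, the usual ingress-nginx default. It also assumes the Ingress is created in the same namespace as the Service it points to.

```yaml
# Sketch: host-based routing from the Nginx Ingress Controller to an internal Service.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: echo-ingress                 # hypothetical name
spec:
  ingressClassName: nginx            # assumed IngressClass of the ingress-nginx controller
  rules:
    - host: project.domain.com       # requests with this Host header...
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: internal-service-echo   # ...go to this ClusterIP Service
                port:
                  number: 80
```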

4. External DNS

So far, we’ve created a Kubernetes Service of type LoadBalancer, which created an AWS load balancer. In the same Service definition, we’ve attached this Service to the Nginx Ingress Controller, to achieve content-based traffic distribution.

The next step is putting CloudFront in front for caching, with WAF for security rules. Alternatively, without using any of these resources, we may decide to simply point the project.domain.com URL to our load balancer.

The challenge here is that the AWS load balancer defined by the Kubernetes Service has a random URL. This URL changes when we delete and recreate the Service, and it differs across DTAP environments. It’s not part of CloudFormation or any other infrastructure management code repository or workflow. So if you manage your DNS records, CloudFront, WAF, etc. with code, your code is not aware of this endpoint.

For example, you don’t want to create a CNAME record like this manually and update it each time:

- project.domain.com -> a95cc6d55403b11eaae260ad58fba825-12312313.eu-central-1.elb.amazonaws.com

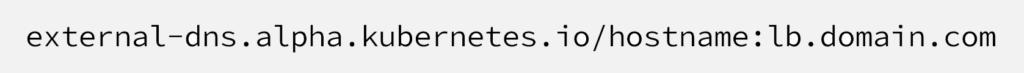

To tackle this problem, we’ll use the external-dns project, maintained under the Kubernetes SIGs. What external-dns does is simply run a Pod that reads an annotation on the Service definition and creates a CNAME record in Route53 pointing to your Kubernetes-managed AWS load balancer (external-dns also supports other cloud providers):

- lb.domain.com -> a95cc6d55403b11eaae260ad58fba825-12312313.eu-central-1.elb.amazonaws.com
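As a sketch of both halves, the block below first shows the annotation to add to the service-public-loadbalancer Service, then a minimal external-dns Deployment for Route53. It assumes the following, none of which is shown here:

- a ServiceAccount named `external-dns` exists, with RBAC permission to watch Services
- `domain.com` is a public Route53 hosted zone
- `my-cluster` is a hypothetical owner ID

The `--aws-prefer-cname` flag asks for a plain CNAME, as described above, instead of the Route53 alias record external-dns would otherwise create for an ELB target.

```yaml
# Fragment to merge into the service-public-loadbalancer Service metadata:
metadata:
  annotations:
    external-dns.alpha.kubernetes.io/hostname: lb.domain.com   # record external-dns maintains
---
# Sketch of an external-dns Deployment for Route53 (ServiceAccount and RBAC not shown).
apiVersion: apps/v1
kind: Deployment
metadata:
  name: external-dns
  namespace: kube-system                 # assumed namespace
spec:
  replicas: 1
  selector:
    matchLabels:
      app: external-dns
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      serviceAccountName: external-dns   # assumed to exist with read access to Services
      containers:
        - name: external-dns
          image: registry.k8s.io/external-dns/external-dns:v0.14.0   # pin a current release
          args:
            - --source=service           # read hostname annotations from Services
            - --provider=aws             # write records to Route53
            - --domain-filter=domain.com # only touch this zone
            - --aws-zone-type=public
            - --aws-prefer-cname         # CNAME instead of a Route53 alias record
            - --policy=upsert-only       # never delete records
            - --registry=txt             # track ownership with TXT records
            - --txt-owner-id=my-cluster  # hypothetical owner ID
```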

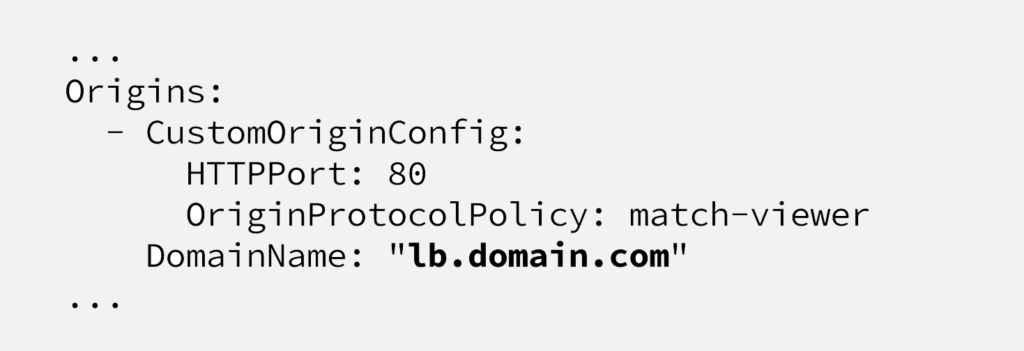

After this setup, you can use the lb.domain.com endpoint as your CloudFront origin, or directly create a CNAME record for your web URL pointing to this endpoint.

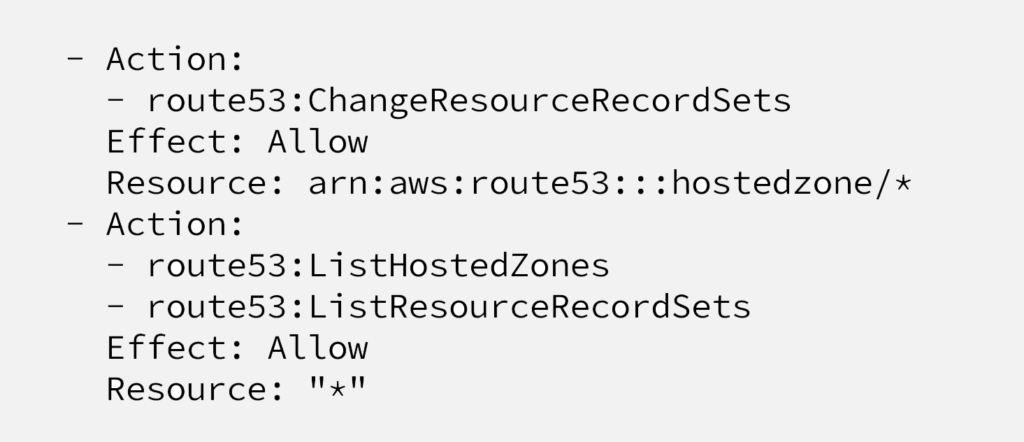

Keep in mind that your worker nodes need Route53 permissions to list hosted zones and record sets and to change record sets. You also need a domain name whose hosted zone is managed by Route53. I highly recommend registering a separate domain in your AWS account instead of letting Kubernetes touch your production domain.
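If the node role lives in your IaC repository, these permissions can be kept there too. Below is a CloudFormation sketch modeled on the policy in the external-dns AWS tutorial. `ExternalDnsRoute53Policy` and `NodeInstanceRole` are hypothetical logical IDs, and newer external-dns releases may require additional actions. Narrowing the hosted zone wildcard to the ID of your dedicated test zone fits the advice above.

```yaml
# CloudFormation sketch: Route53 permissions that external-dns uses via the worker nodes' role.
Resources:
  ExternalDnsRoute53Policy:                    # hypothetical logical ID
    Type: AWS::IAM::ManagedPolicy
    Properties:
      Roles:
        - !Ref NodeInstanceRole                # hypothetical: the worker nodes' IAM role
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow                      # create, update and delete records
            Action:
              - route53:ChangeResourceRecordSets
            Resource:
              - arn:aws:route53:::hostedzone/* # narrow to your hosted zone ID if possible
          - Effect: Allow                      # discover zones and existing records
            Action:
              - route53:ListHostedZones
              - route53:ListResourceRecordSets
            Resource:
              - "*"
```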

5. CloudFront and WAF

The next and last step is configuring and attaching CloudFront and WAF. As mentioned before, this is optional but highly recommended; the advantages are:

- Adding an extra layer between the load balancer and end users, so they won’t see the Kubernetes-created load balancer endpoint

- Caching some content (if needed)

- Integrating CloudFront with WAF and implementing security features, for, well, security.

- Offloading SSL traffic at CloudFront

We won’t go through the CloudFront and WAF configuration in this post, but just to mention again: the origin will be the lb.domain.com endpoint, managed by the external-dns project.
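For illustration only, a CloudFormation sketch of such a distribution might look like the following. `WebAclArn` and `CertificateArn` are hypothetical template parameters; a certificate used by CloudFront has to be issued in us-east-1. One detail is worth noting: Nginx Ingress routes on the hostname, so the sketch forwards the viewer's `Host` header to the origin. Otherwise, CloudFront would send `lb.domain.com` as the Host.

```yaml
# CloudFormation sketch: CloudFront + WAF in front of the external-dns managed endpoint.
Resources:
  ProjectDistribution:                           # hypothetical logical ID
    Type: AWS::CloudFront::Distribution
    Properties:
      DistributionConfig:
        Enabled: true
        Aliases:
          - project.domain.com                   # public hostname served by CloudFront
        WebACLId: !Ref WebAclArn                 # hypothetical parameter: WAF web ACL
        Origins:
          - Id: kubernetes-lb
            DomainName: lb.domain.com            # record maintained by external-dns
            CustomOriginConfig:
              OriginProtocolPolicy: http-only    # SSL is offloaded at CloudFront
              HTTPPort: 80
        DefaultCacheBehavior:
          TargetOriginId: kubernetes-lb
          ViewerProtocolPolicy: redirect-to-https
          ForwardedValues:
            QueryString: true
            Headers:
              - Host                             # lets Nginx Ingress route on project.domain.com
        ViewerCertificate:
          AcmCertificateArn: !Ref CertificateArn # hypothetical parameter (us-east-1 certificate)
          SslSupportMethod: sni-only
          MinimumProtocolVersion: TLSv1.2_2021
```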

That’s it!

If you have any questions or comments, feel free to reach out to us.